This article is written based on the lecture MATH532 by Prof. Hwang at POSTECH.

What is Gradient Descent Algorithm

In machine learning, we usually have to find a way to minimize a traget function (e.g. MSE). How can we find the minimum of the target function if it is too dizzy to find the minimum? Think about the basic calculus which you learned in your freshman. There was a notion called “Gradient,” which points the dirction of the steepest slope. Its magnitude also means the rate of increase or decrease of the function in the direction. Therefore, gradient is very of use to find minimum or maximum valu in that it points where we have to go to find our destination. Of course, we have to follow the direction where gradient points. This can be written as

where and is a loss function.

Limitations

https://www.reddit.com/r/ProgrammerHumor/comments/eez8al/gradient_descent/

Simple gradient descent alogrithm has several limitations.

-

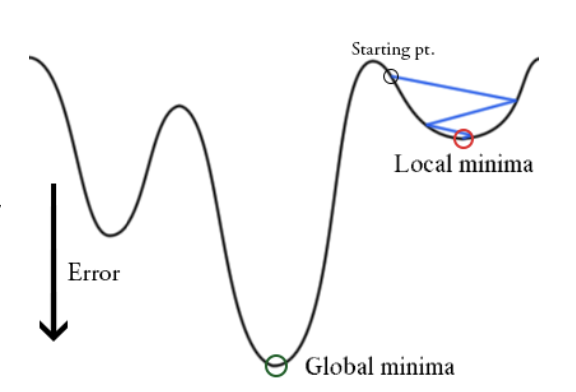

If the starting point for gradient descent was chosen inappropriately, the algorithm will only make it approach a local minimum, never reaching the global one.

-

We need to compute for all to perform one update. Hence, it can be very slow and is intractable for datasets that don’t fit in memory.

Stochastic Gradient

One method to overcome the limitations alluded before is the stochastic gradient descent. It is literally the stochastic approximation of gradient descent optimization.

We select data size from all sample size . Then we can iteratively calculate parameter where the loss function is being minimized. This can reduce the computational burden. Also, there is a fluctuation in the objective function since we select the data randomly. This fluctuation enables it to jump to new and potentially better local minima.

Momentum Methods

This is the most interesting algoritm in the class today! When we drop the ball on the complex loss function with a proper friction, the ball is likely to pass through the local minima and arrive at global minima. This is becuase as ball moves downward, its potential energy transform to the kinetic energy so that it gains the energy to pass through the local minima. Momentum Methods are made inspired by this physical phenomenon. The name momentum comes from the fact that the negative gradient is a force moving a particle through parameter space. (although it is not exactly the same as that found in classical momentum.)

The algorithm can be expressed with equations as below.

Here, plays a role as a friction. (Although not exactly same.) It is a method that helps acclerate SGD (Sotchasstic Gradient Descent) in the relevant direction and dempens oscillations. Also, it can hlep to escape local minima.

Nestrov Accelerated gradient

Momentum method adjusts the momentum based on current position . On the other hand, Nestrov Acclerated gradient based on approximated future position .

Adaptive Method

AdaGrad

As I alluded before, in order to arive at global minimum, not local minimum, we need to have appropriate parameter . Too small can cause too slow convergence and too small can cause too much fluctuation to converge. Also having a constant is not suitable in the complex loss function.

AdaGrad (Adaptive Gradient) is the algorithm that djust learning rate automatically. It updates more for infrequent parameter and less for frequent parameter. The algorithm can be written as

As the accumulated gradient gets bigger, the learning rate gets smaller.

RMSProb

AdaGrad has some weaknesses because denominator . Since increases monotonically, the learning rate becomes shrink and eventually becomes very small so the algorithm can not learn anymore. If we change the summation of into the average of , the problem can be solved. RMSProb average previous and current with the weight and respectively.

where

and

AdaDelta

AdaDelta is another extension of AdaGrad. AdaDelta not only focuses on the denomiantor but also numerator.

and

Adam

Adam is the algorithm that mixes RMSProp and Momentum. It was proposed by KIngma &Ba. It not only keeps the average of past squared gradients like Adadelta and RMSProp, but also keep the average of past gradients. Since inital average are biased towards zero, Adam adjusts initial averages to be unbiased.

and

Code

You can get the gradient descent code I wrote from scratch.

https://github.com/MunjungKim/Gradient-Descent-Algorithm

Reference

-

Hastie, T., Tibshirani, R., & Friedman, J. (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition. New York: Springer Series in Statistics

-

James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An introduction to statistical learning (Vol. 112). New York: springer.